Another small update on the JavLibrary issue. Be careful with your actress data too; I'd back it up just in case. I've had several scans create new actresses for movies, and I was baffled as to why, but it's simply storing the scraped data the JavLibrary way, with the name reversed, as in: Akari Asagiri to Asagiri Akari.

If it happens and you think you've lost all your precious data on an actress, just put the name either way into JavLuv and merge the blank one into the main one. I wouldn't delete the one that's wrong; it doesn't seem to like that.

JavLuv JAV Browser

- Thread starter TmpGuy

With all due respect, this sounds like a storage management issue, not a JavLuv issue.

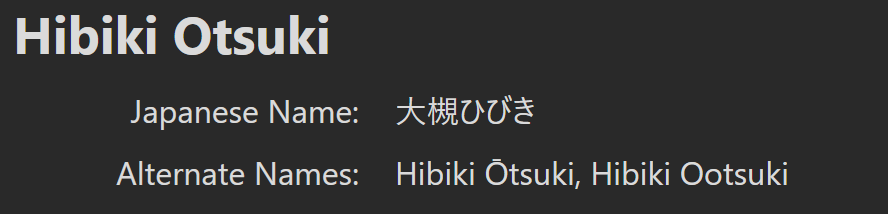

Actresses are notoriously difficult for JavLuv to manage, due to the nature of the Japanese language, and how there isn't really an exact English name for a given Japanese name. Add to this the issue that actresses sometimes change names throughout their careers (some have many aliases), two possible name orderings, and the problem grows even more tricky. Hibiki Otsuki is my favorite example, as there are no less than three valid ways to spell "Otsuki".

I attempt to alleviate this with fuzzy algorithms that employ a "closest match" in case of minor spelling differences, but I didn't want to introduce too many false positives. It works most of the time, but does sometimes require manual intervention.
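To make the "closest match" idea concrete, here is a minimal Python sketch built on the standard library's `difflib`. It is not JavLuv's actual code: the function name `closest_actress`, the sample names, and the 0.85 cutoff are all assumptions chosen for illustration.

```python
from difflib import get_close_matches

def closest_actress(name, known_names, cutoff=0.85):
    """Return the known name most similar to `name`, or None if nothing is close enough."""
    # Compare case-insensitively, but return the name as it is stored.
    lowered = {known.lower(): known for known in known_names}
    hits = get_close_matches(name.lower(), list(lowered), n=1, cutoff=cutoff)
    return lowered[hits[0]] if hits else None

known = ["Hibiki Otsuki", "Akari Asagiri"]
print(closest_actress("Hibiki Ohtsuki", known))  # minor spelling difference
print(closest_actress("Yui Hatano", known))      # unrelated name
```

A high cutoff trades missed matches for fewer false positives, which is the balance described above. Names that fall below the cutoff would still need manual intervention.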

If there's a name with no corresponding database entry, JavLuv will create one, or else it ends up having issues. If you delete it, it will just be recreated later. So, merging it is the correct answer, as this will also merge names such that the alternate name is preserved.
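As a sketch of why merging keeps more information than deleting, the following Python snippet folds a blank entry into the main one and keeps the blank entry's name as an alternate. The `Actress` class and its fields are a hypothetical data model, not JavLuv's real one.

```python
from dataclasses import dataclass, field

@dataclass
class Actress:
    # Hypothetical record layout, for illustration only.
    name: str
    alt_names: set = field(default_factory=set)
    notes: str = ""

def merge(main, blank):
    """Fold `blank` into `main`, keeping the blank entry's name as an alternate."""
    alt = (main.alt_names | blank.alt_names | {blank.name}) - {main.name}
    return Actress(name=main.name, alt_names=alt, notes=main.notes or blank.notes)

main = Actress("Akari Asagiri", notes="favorite")
blank = Actress("Asagiri Akari")
merged = merge(main, blank)
print(merged.name, sorted(merged.alt_names))
```

Because the reversed name survives as an alternate, a later scrape that returns "Asagiri Akari" can be matched to the existing entry instead of creating a new blank one.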

In theory, JavLuv should have dealt with name order, so long as the websites scraped from display the name consistently. So I'm not sure why that's occurring.
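One simple way to make a comparison indifferent to name order is to compare the sorted name parts. This is only a sketch of the idea in Python, not how JavLuv handles it; the helper names are made up for this example.

```python
def order_key(name):
    """Build a key that ignores word order and letter case."""
    return tuple(sorted(name.lower().split()))

def same_name_ignoring_order(a, b):
    """True when both names consist of the same parts, in any order."""
    return order_key(a) == order_key(b)

print(same_name_ignoring_order("Akari Asagiri", "Asagiri Akari"))  # True
print(same_name_ignoring_order("Akari Asagiri", "Akari Hoshino"))  # False
```

This only catches exact reversals. Reversed names that also differ in spelling would need the order-insensitive key combined with a fuzzy comparison.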

Considering how often I have to tell people who use JavLibrary as their info source when posting here that there are mistakes in an actress's name, I doubt the names are consistent across the different sites.

I.... have no idea why that was what got quoted. I meant to quote tre11's post and I was pretty sure that was what was previewed before I clicked "Post reply". Apologies for the confusion. Hope this clears it up as I obviously am not going to start making sarcastic jabs about how software I have never even looked at the code of works.

No worries friend. Regarding people with temporary / swapping drives... well, I'm trying to make JavLuv accommodate the way people actually store and organize their own collections, and swapping drives are a reality for some. Personally, I use a Synology NAS, so it's pretty simple for me, and JavLuv naturally reflects my own storage preference. But I realize that's not practical for everyone.

I think I've already got an item in the to-do list to better support temporary storage in JavLuv. I interpreted that post not as a bug report, but a new feature request, and it's not the only time I've gotten a similar request.

I guess having some sort of visual indicator or filter for when media isn't currently available/can't be found would work for most people. It's just bizarre to me that anyone would want some of their covers to straight up disappear when the content associated with them isn't available for playback.

Does anyone have a workaround for scanning movies over these last few months? I know there is currently a problem with the JavLibrary scraper. When I scan, some movies get their metadata while others come back empty, even though I get the cover image for all of them.

For those movies I got every cover image, but only a few got metadata. Why don't the others get theirs? For now I enter the info manually, but that takes a lot of time. Hopefully there is a workaround.

It's a pain atm, but I haven't found a way around the JavLibrary issue yet, and JavLibrary now gives me a headache just loading in my browser because I'm running a VPN and ad blockers. If the JavLibrary scrape returns data at all, the names will be reversed and a blank actress is created. It's a huge headache, but there's not a lot we can do at the moment until TmpGuy finds a workaround.

Yeah it does. I usually add them manually via JavRave.club, or with as many variations as possible. If a blank dupe occurs, I've found it best to merge the two, so that an alternate with the reversed name is created, rather than deleting the blank actress and adding the reversed name manually. But ofc you know better than me.

Just FYI, I've seen this issue as well. I've had to go and manually correct actress names in movie metadata, and it's not a lot of fun. Reversed names too, for some reason. I suspect JavLuv is now relying more heavily on non-JavLibrary sites, and one of them is returning names in the reverse of the order I'm expecting. I'll double-check my actress scrapers and see which one is misbehaving. If you guys have log files from when you see this happening, that might help as well, if you can point out the exact movie where it happened. Otherwise I'll wait until it happens to me again.

Hi there. This is a configuration question rather than a real problem. I've scanned several main folders with a total of about 20K files. When the scan is done, the first couple of dozen listings show no icon/cover, but there are a bunch of listings. When I click on one of them it shows 2 or more links for that title. So there may be abc-124.mp4 and abc124.mp4 and maybe abc-124 buttpluggers anonymous.mp3. All 3 got associated with that name. The links don't give me the option to delete, and since there are multiple files associated with that title, you can't click on what would be the cover and delete that way.

Some of these dupes aren't strictly dupes. For example, ncy-123.ts and ncy123.ts are, for the most part, dupes, except the latter has trailers at the end of the video, which adds about 15-30 minutes. Anyway, if I go to the folder(s) with the dupes or quasi-dupes and delete all but one of the files, the JavLuv listing still shows the 3 files associated with the name. I tried re-running a scan in that directory, but since the titles are already listed in JavLuv, it won't re-scan or update the data.

The only solution I've found is to delete all of the img and nfo files from the directory and start again with a fresh scan. I'm hoping there is a better method. As I have said many times, this program is fantastic, and this is a first-world problem that I can live with, but if there's a way to accomplish this, I would be grateful to know it. Cheers.
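To illustrate why differently named files end up under one title, here is a rough Python sketch that derives a normalized ID from a file name and groups files by it. The regular expression is an assumed ID shape (a few letters, an optional hyphen, then digits at the start of the name), not JavLuv's actual parsing rules, and the file names are examples.

```python
import re
from collections import defaultdict

# Assumed ID shape: 2-6 letters, optional hyphen, 2-5 digits at the start of the name.
ID_PATTERN = re.compile(r"^([A-Za-z]{2,6})-?(\d{2,5})")

def movie_id(filename):
    """Return a normalized ID such as 'ABC-124', or None if no ID is found."""
    match = ID_PATTERN.match(filename)
    return f"{match.group(1).upper()}-{match.group(2)}" if match else None

def group_by_id(filenames):
    """Map each normalized ID to the list of file names that share it."""
    groups = defaultdict(list)
    for name in filenames:
        groups[movie_id(name)].append(name)
    return dict(groups)

files = ["abc-124.mp4", "abc124.mp4", "abc-124 some title.mp4", "ncy-123.ts"]
print(group_by_id(files))
```

Under this scheme "abc-124", "abc124" and "abc-124 some title" all normalize to the same ID, so any tool that groups by ID will treat them as one movie with several files.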

If I'm getting this right: you are scanning your files in JavLuv and getting a lot of blank covers for video IDs it doesn't recognise or has no scraper for, and then you wish to delete them from JavLuv?

If this is the case, do you want to keep the videos? If not, go back to the main window so they all show at the top. Select the first one you want gone, press and hold Shift [this lets you select multiples in a continuous run], select the last one, release Shift, then right-click on them and select Delete [be careful: this delete bypasses the recycle bin].

If you want to keep them but JavLuv won't erase the .nfo files, so they remain [they could be set to read-only; also, if you are running an AV like Norton, then because JavLuv isn't digitally signed, the AV will panic when JavLuv goes to delete a file, so you have to add JavLuv as an exception in Norton or whichever AV you use], there are a few ways you can approach this.

First, select all the files you want JavLuv to forget using the previous method, but don't delete them; instead select Delete Meta Data.

I'm not sure of the rules on linking tbh, so @SamKook, is this allowed? It's safe, I've been using it for years, but it's direct linking.

Still not working? Download [Everything]: https://www.voidtools.com/downloads/

Install it. It's basically the best disk indexer that I've ever used, and it's free. Once it has scanned your drives, just type the ID of the title and then manually delete all files associated with said ID.
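If you would rather not install anything, the same lookup can be roughed out with a short Python script. This is only a sketch: the function name `find_files_for_id` and the drive paths in the demo are hypothetical, and walking the folders is far slower than an indexer like Everything. It lists files and deletes nothing.

```python
import os
import re

def find_files_for_id(roots, movie_id):
    """Yield paths whose file name contains the ID, with or without the hyphen."""
    letters, digits = movie_id.split("-")
    # (?!\d) stops AUKG-001 from also matching AUKG-0012.
    pattern = re.compile(
        rf"{re.escape(letters)}-?{re.escape(digits)}(?!\d)", re.IGNORECASE
    )
    for root in roots:
        for folder, _dirs, files in os.walk(root):
            for name in files:
                if pattern.search(name):
                    yield os.path.join(folder, name)

if __name__ == "__main__":
    # Hypothetical library locations; replace with your own folders.
    for path in find_files_for_id([r"D:\JAV", r"E:\JAV"], "AUKG-001"):
        print(path)
```

The output includes videos, covers and .nfo files alike, so you can review everything tied to one ID before deciding what to remove by hand.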

If you are planning on keeping the vids, then it's best to put them in a folder that isn't scanned, I've found.

If you are getting multiple vids under the same title, I have had this. The only way is to manually delete said .nfo file and rescan. If you are getting multiple under the same name but with variations on the full title [not a different ID], then this was done to allow for, say, A, B and C parts like: AUKG-001 A, AUKG-001 B. You can rename all your variations. I have several, including [Upscaled], [RM-Reduced Mosaic], [60FPS] and so on.

They currently all have to reside in the same folder, or you will get log alerts saying the file already exists [so the new one is skipped]. Use Everything and put in the ID. Now look at the locations and sort it out from there, or move the files to the same folder and then rescan the video so JavLuv picks up on the changes.

Also, when I say rescan: don't Regenerate Metadata on existing videos unless you absolutely have to, as the scrapers are FUBAR at the moment with actresses; TmpGuy is working on a solution. Rescan looks for changes to the actual video, like added files and name changes.

I have, I think, about 90+TB across multiple drives, and not all of them are on at once. Now don't quote me on this, but if you just rescan one file, all the files on offline drives will be delisted; not gone, just delisted until the drive is back online.

And I agree that an indicator would be very helpful. If that works, then TmpGuy could simply add a button that does a similar thing, which would be a very easy fix.
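A rough sketch of what such an indicator could look like in Python: instead of dropping an entry when its file can't be reached, label it. The `LibraryEntry` class and the "online"/"offline" labels are hypothetical and not part of JavLuv.

```python
import os
from dataclasses import dataclass

@dataclass
class LibraryEntry:
    # Hypothetical record: a movie ID and where its file was last seen.
    movie_id: str
    path: str

def availability(entry):
    """Label an entry instead of dropping it when its file can't be reached."""
    return "online" if os.path.exists(entry.path) else "offline"

def annotate(entries):
    """Return (movie_id, status) pairs for every entry, reachable or not."""
    return [(entry.movie_id, availability(entry)) for entry in entries]
```

A browser could then grey out or filter the "offline" entries while still showing their covers, which covers both preferences raised in this thread.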

So, I just imported a number of new movies, and believe me, I'm also feeling the pain of the JavLibrary scraper not working properly. A few suggestions: If you see blank movies, you have two choices. You can try rescanning for metadata again, and sometimes this actually works. Probably more anti-scraping measures.

If you want to keep the movie, and re-scanning doesn't work, you can still enter all the relevant data manually. It's a pain in the ass, but it's possible. Just open the page, and you can edit all the fields manually. Make sure actresses are entered first-last order. You can save a cover image to disk, and then import it from the thumbnail view.

Because I wrote JavLuv primarily for myself, I'm pretty motivated to get this working again. Hopefully I can find a working solution, but so far the solutions look fairly complicated. I've poured way too many hours of work into it to let it decay into unusability. Fortunately, once data is entered, you can still use it as a pretty handy browser. Be sure you do NOT re-scan your collection, because that will blow away any manually-entered data. That's kind of a crappy design flaw, but I've got bigger fish to fry at the moment.
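Since a re-scan can wipe manual edits, one precaution is to copy the .nfo files somewhere safe first. The Python sketch below assumes the manually entered movie data lives in .nfo files next to the videos, which this thread suggests but does not confirm; actress data may be stored elsewhere and is not covered. The function name and folder layout are hypothetical.

```python
import shutil
from pathlib import Path

def backup_nfo_files(library_root, backup_root):
    """Copy every .nfo file under library_root into backup_root, preserving subfolders."""
    library_root, backup_root = Path(library_root), Path(backup_root)
    copied = 0
    # list() snapshots the matches before any copying starts.
    for nfo in list(library_root.rglob("*.nfo")):
        target = backup_root / nfo.relative_to(library_root)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(nfo, target)  # copy2 also keeps file timestamps
        copied += 1
    return copied
```

Keep the backup folder outside the library folder, so the copies are not picked up by a later scan or by the next backup run.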

I think the only working scraper is the JavSeen one. I checked a few movies that got metadata, and all of them are available on JavSeen, while the ones without metadata are not. I don't know where the covers come from, though, because I got nearly all the covers when I scanned; maybe only 3-5 movies didn't get one.

And yes, I have entered metadata manually for over 100 movies, and it takes a lot of time. It's giving me a headache because I copy the information over one piece at a time, at least 7 to 8 fields per movie. So I hope you find a solution for this problem, because this software is really great and really useful.

From my internal tests, Jav.land and JavSeen.tv are still working. At the moment, the two best sources, JavLibrary.com and JavDatabase.com appear to be blocking scrapers, and so are not working.

I don't think this is going to be an easy fix. I've long worried about this, but they've put tech in place specifically designed to block automatic scrapers like JavLuv (the "verifying you are a human" check you see when visiting using your browser). I'm hoping there's some workaround, but I can't guarantee it. I'll keep folks posted on any progress.

On my side, I'm using a VPN, and I can only access JavDatabase and JavSeen without being asked for the "verifying you are human" captcha. JavLibrary and Jav.land ask for the captcha. I tried completing the captcha in my browser and rescanning the movies, but it made no difference.

To clarify, JavDatabase is using a slightly different mechanism. When I perform a search query by ID in JavLuv, the page returns a notice saying that cookies and Javascript must be enabled, neither of which is present in a simple HTTP query like JavLuv uses. I think there's a way to fake this, and at least get JavDatabase working again. JavLibrary might be a bit tougher, as it's got an actual human verification page with a Javascript-based redirector, which I'd need a more sophisticated mechanism to bypass.
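For anyone curious how a scraper can at least tell these notice pages apart from real results, here is a heuristic Python sketch. The marker strings and status codes are guesses based on the descriptions in this thread, not the sites' actual page text, and `looks_blocked` is a made-up helper rather than anything in JavLuv.

```python
# Assumed phrases; the real notice pages may word these differently.
BLOCK_MARKERS = (
    "verifying you are human",
    "enable javascript and cookies",
)

def looks_blocked(status_code, html):
    """Heuristically decide whether a response is an anti-bot page rather than real content."""
    # These status codes are commonly returned by bot-protection layers.
    if status_code in (403, 429, 503):
        return True
    text = html.lower()
    return any(marker in text for marker in BLOCK_MARKERS)

print(looks_blocked(200, "<html><body>ABC-124 cast and cover</body></html>"))
print(looks_blocked(200, "<p>Please enable JavaScript and cookies to continue</p>"))
```

Detecting the block does not get past it, but it would let a scanner report "blocked by the site" instead of silently leaving a movie blank.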

So, some positive news: I made a simple test app embedding a fully featured browser component, and was able to successfully parse JavLibrary and JavDatabase websites. Now upcoming work will involve figuring out how to re-work the scraping mechanism to replace the web parser in JavLuv. I'm also hoping to display any captcha-like site that needs human interaction before proceeding to the main site, like JavLibrary occasionally requires.

I'm working on this mostly on weekends, so not sure how long it will take to finish... maybe a few weeks? I'll keep you guys posted.
