Post your JAV subtitle files here - JAV Subtitle Repository (JSP)★NOT A SUB REQUEST THREAD★

- Thread starter Eastboyza

try this one https://github.com/voun7/Video_Sub_Extractor

I have always wanted to be able to extract hard-coded subs from JAV movies, but until now I had been unsuccessful. I found a simplified tutorial, or as I call it, a "How to for Dummies"! The link to this tutorial is: https://www.videoconverterfactory.com/tips/extract-hardcoded-subtitles.html#1

I have only tried it on one video with hard-coded English subs, so I'm not positive it will always work, but it looks promising. I am posting the raw sub for DVDES-626, but as you can see, it could still use some editing help. I have not tried the procedure on Chinese subs, which would also need an extra step to translate the result. Anyway, this is my first "success" with extracting hard-coded subs.

DVAJ-517 Nanami Kawakami Tempts Her Neighbor Who Lives Across Her Window

I've continued to experiment with the combination of Whisper and AI.

I wanted to do a quality check, so I selected this one, which had a Chinese sub as well as a sub from JAVENGLISH. I did a scene-by-scene comparison, and the Whisper+AI sub showed a much better result than both of the other subs. One sample doesn't mean much, but I hope it is a promising path.

SONE-604 I Was Left In The Care Of My Uncle As A Teenager, And While I Felt Disgusted, He Licked My Body And Screwed Me.

I was using DeepL cause it was mostly free, but I'm now using DeepSeek, which feels so much better. It's so much cheaper than other models, especially now that they are doing 75% off-peak discount prices. Something like this would probably cost less than €0.01. The only thing I notice is that you can ask DeepSeek to translate the same file 2 times and it will sometimes give a more correct translation. No idea if other models do that as well; I'm not too bothered about testing them, as they are way more expensive. And this quality is good enough anyway.

There were very minor corrections, 4 in total I think, and I like to keep in terms like Ojisan, Hentai, Lolicon, Oppai, ... (which you can ask the model to do)
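Since running the same file through the model twice can give different results, one way to use that is to diff the two passes and only hand-check the lines that disagree. A minimal sketch, assuming both passes are already loaded as equal-length lists of translated lines; the function name `flag_disagreements` and the 0.8 threshold are made up for illustration and would need tuning:

```python
from difflib import SequenceMatcher

def flag_disagreements(pass_a, pass_b, threshold=0.8):
    """Return (index, line_a, line_b, ratio) for lines whose similarity is below threshold.

    pass_a and pass_b are lists of translated lines from two separate runs
    over the same source file (hypothetical inputs for this sketch).
    """
    if len(pass_a) != len(pass_b):
        raise ValueError("both passes must contain the same number of lines")
    flagged = []
    for i, (a, b) in enumerate(zip(pass_a, pass_b)):
        # Case-insensitive character-level similarity between the two versions.
        ratio = SequenceMatcher(None, a.lower(), b.lower()).ratio()
        if ratio < threshold:
            flagged.append((i, a, b, round(ratio, 2)))
    return flagged
```

Lines that come back flagged are the ones where the model was least consistent, so they are probably the ones worth checking against the audio first.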

MIRD-216 Bored In The Countryside, Summer Vacation Made Creampie

Miu Arioka, Ruka Inaba, Sachiko, Maria (Hana) Himesaki

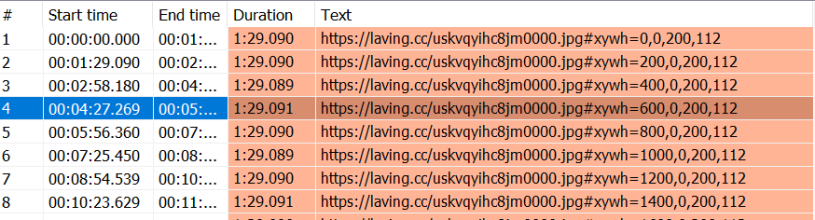

Anyone know how to convert this kind of subtitle (WebVTT-based thumbnails)?

If you look at the durations, every single sub seems to be 1:29.09x long. You can also see that the URL pointers are incremented by constant values. I think this indicates that the VTT is bogus or a dummy filler rather than a viable subtitle file.

Which makes me wonder how the streaming server picks up the correct subs at time of playback.
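A quick way to check whether a VTT file is a thumbnail track rather than real subtitles is to look at the cue payloads. The sketch below assumes the file follows the common convention where each cue points at a sprite image with an `#xywh=x,y,w,h` fragment; that is an assumption about this particular file, and the helper names are made up for illustration:

```python
import re

# Matches a payload ending in a fragment such as "sprite.jpg#xywh=0,0,160,90".
XYWH = re.compile(r"#xywh=(\d+),(\d+),(\d+),(\d+)\s*$")
# Matches a cue timing line such as "00:00:00.000 --> 00:00:10.000".
TIMING = re.compile(r"^\s*\S+\s+-->\s+\S+")

def parse_cues(vtt_text):
    """Return a list of (timing_line, payload) pairs from WebVTT text."""
    cues = []
    blocks = re.split(r"\n\s*\n", vtt_text.replace("\r\n", "\n").strip())
    for block in blocks:
        lines = block.split("\n")
        for i, line in enumerate(lines):
            if TIMING.match(line):
                # Everything after the timing line is the cue payload.
                cues.append((line.strip(), "\n".join(lines[i + 1:]).strip()))
                break
    return cues

def looks_like_thumbnail_track(vtt_text):
    """True if there is at least one cue and every payload ends in #xywh=..."""
    cues = parse_cues(vtt_text)
    return bool(cues) and all(XYWH.search(payload) for _, payload in cues)
```

If this returns True, the file only maps time ranges to regions of a preview image, so there is no dialogue text in it to convert.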

Thanks for the tip. I didn't know a second pass would make DeepSeek's translation better. Would the second pass come after the entire sub file is finished, or right after each sub?

About the off-peak, how's your experience with the response time? I tried to do a long SRT file during the off-peak period, but the response time was more than a minute for each call. I wonder whether there is something wrong with my code or whether off-peak is really that busy.
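If each call carries only a few lines, the per-call wait adds up fast on a long file, so one thing worth trying is sending more cues per request. A rough sketch of splitting an SRT into batches, assuming a plain SRT layout; `parse_srt`, `batch_cues` and the batch size of 30 are hypothetical and not taken from any of the tools mentioned here:

```python
import re

def parse_srt(text):
    """Split SRT text into a list of dicts with index, timing and text."""
    cues = []
    for block in re.split(r"\n\s*\n", text.replace("\r\n", "\n").strip()):
        lines = block.split("\n")
        # A usable cue has a counter line, a timing line and at least one text line.
        if len(lines) >= 3 and "-->" in lines[1]:
            cues.append({
                "index": lines[0].strip(),
                "timing": lines[1].strip(),
                "text": "\n".join(lines[2:]),
            })
    return cues

def batch_cues(cues, batch_size=30):
    """Group cues into consecutive batches of at most batch_size items."""
    return [cues[i:i + batch_size] for i in range(0, len(cues), batch_size)]
```

Each batch would then go out as one request, which also gives the model neighbouring lines as context. Cues with no text are skipped by this parser.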

I wasn't talking about doing a second pass with DeepSeek, just doing "one pass" twice and getting different results.

Dunno if you are writing your own code, but with Subtitle Edit the response time seems incredibly slow too, not sure what's going on.

I've been now using GPTSubtitler.com (you can choose tons of models) and the response time seems normal (in line with their chat response time). I'm not a fan of their pricing and plans, I'm pretty sure they cheat you on amount of tokens used. I've been using the site but chose to use my own API key.

Why I like it is cause they have a great pre-configured default prompt that tells the models to do context checking. If you use R1 deepseek thinking model you can really see how it operates. They also have a sort of 2nd pass option, but I tried it once and I wasn't a fan at all. Even if you're using your own code, I'd have a look at their prompt.

I'm now testing Diarization with whisper. With a prompt like that I assume that it would benefit from differentiating the speakers and understand the context even better.

I hadn't considered GPTSubtitler.com. I just checked them out, and it does look quite decent and transparent on the surface.

I have adopted this repo for my script: chatgpt-subtitle-translator.

Getting the names and the pronouns right is a pain. Diarization is a good idea. In case you're interested (and planning to use pyannote), there is a Japanese fine-tuned version that imo is more decent than the stock version: transcribe_japanese_with_diarization.py

I gave it a try on a small sample, and it's only seeing 1 speaker while there should be 3.

I even set the number of speakers to 3 and upped the sensitivity, and it still only saw 1 speaker. I tried it 3 times, and 1 time it saw 2.

I'll give it another go on Linux in WSL so I can use ROCm, cause atm I'm on AMD and transcription is sooo slow. But that's a project that might take a bit of time, since I'm not too well versed in Linux.

With the standard pyannote I had no problems; diarization was working correctly, but I didn't see much of an improvement. I need to test it on one where it gets all the pronouns wrong, but DeepSeek does a great job at getting it right or just skirting around the problem of using pronouns.
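For anyone wanting to feed speaker labels into the translation prompt, the usual approach is to give each transcript segment the speaker whose turn overlaps it the most. A minimal sketch, assuming the transcript segments and the diarization turns have already been exported as plain tuples of seconds; the tuple layout and function names are assumptions for illustration, not the output format of Whisper or pyannote:

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length in seconds of the intersection of two time intervals."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))

def assign_speakers(segments, turns):
    """Label each transcript segment with the speaker whose turn overlaps it most.

    segments: list of (start, end, text)
    turns:    list of (start, end, speaker)
    Returns a list of (speaker_or_None, text).
    """
    labeled = []
    for s_start, s_end, text in segments:
        best_speaker, best_overlap = None, 0.0
        for t_start, t_end, speaker in turns:
            ov = overlap(s_start, s_end, t_start, t_end)
            if ov > best_overlap:
                best_speaker, best_overlap = speaker, ov
        labeled.append((best_speaker, text))
    return labeled

def distinct_speakers(labeled):
    """Set of speaker labels actually assigned, handy as a sanity check."""
    return {speaker for speaker, _ in labeled if speaker is not None}
```

Checking `distinct_speakers` on a short sample first would show right away whether the diarizer is collapsing everyone into one speaker.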

It is a type of HTML subtitle; the coordinates point to the exact position of the text on the existing image.

try this https://ai-subtitle-translator.netlify.app or this https://translator.mitsuko.web.id

I love how the mitsuko is designed. I'm gonna try it right away.

Thanks !!!

SNIS-625 Tsukasa Aoi's first ever Omarashi [golden shower]

I saw the news that the new Scribe model has shown the best transcription results.

I wanted to give it a try.

A bit of mishap

I finally integrated the "best" of Sextb's liberated sub into my last URE-014 Sub! I think I'm finally done with subbing URE-014! Anyway here is my "final" rev for URE-014! Enjoy and let me know if it was worth it!

Thank you Chuckie! I love Manga inspired JAV films and URE-014 is one of the best!

Speaking of Manga inspired JAVs, have you seen the new URE-121 film coming soon with JAV MILF Ririko Kinoshita? It's also based on a Manga, where an office wife is having an affair with a younger guy behind her husband's back. It's called "My relationship with Mrs. Fujita". I have not been able to find the Manga translated into English, but I did find a Spanish translation and it reads HOT! Release date is March 7. I love JAV MILFS!

MTALL-144 Kana Yura - A college student, treated like a sex doll, is defiled in broad daylight when a man invades through her window and inserts his penis while she sleeps.

My "100 lines" test, which I expected to be fairly quick, turned out to be way harder than expected. I figured there wouldn't be much dialog with a girl sleeping basically the whole movie, but I should have figured the guy would be whispering the whole time, and Whisper is not great at catching low-volume speech. I ended up having to cut 133 audio files with boosted audio and feed them to Whisper one by one to try to figure out what it had missed the first time.

I used the Pro version of the whisperwithVAD colab with identical settings (the default) for both Whisper versions, and then used normal Python Whisper with the large-v2 model for the audio files I manually extracted and amplified (by 10-20 dB).
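For reference, a boost in dB maps to a linear amplitude factor of 10^(dB/20), so +10 dB is roughly 3.16x and +20 dB is exactly 10x. A small sketch of applying that to raw samples, assuming floats normalised to the range -1.0 to 1.0; the function names are made up for illustration, and in practice an audio editor or ffmpeg does this step:

```python
def db_to_gain(db):
    """Convert a decibel change into a linear amplitude multiplier."""
    return 10 ** (db / 20)

def amplify(samples, db):
    """Scale float samples by the given dB boost, clipping to [-1.0, 1.0]."""
    gain = db_to_gain(db)
    return [max(-1.0, min(1.0, s * gain)) for s in samples]
```

The clipping step is why the boost has to stay moderate: anything already loud in the clip gets flattened at full scale, which can hurt transcription more than it helps.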

The fully translated by whisper version is 109 lines.

The transcription-only (Japanese text) version from Whisper is 168 lines (I expected it would contain duplicates and hallucinations, but nope, just a handful of sounds transcribed as speech and that's it).

The manually processed (aka me translating with AI and adding lines) Whisper transcription ended up being 279 lines after removing the commented ones, so about triple what I expected, lol.

What I ended up doing is translating line by line with Gemini (the Google AI), and the result was surprisingly good. The biggest problem by far was Whisper either not picking up lines or transcribing them wrong. For many of them I had to sound the line out in romaji to the AI myself and ask it to find something close that made sense, which worked well most of the time.

There's a few lines that I had to guess more than I would have liked and some that were translated a bit awkwardly, but overall I'm very pleased with the result, I consider it good enough to be worth the effort even with my extremely limited Japanese knowledge.

The AI helps a lot for translating and speeds things up quite a bit compared to fully doing this manually with a low Japanese understanding.

Translating the whole movie ended up taking roughly 36 hours (not counting the initial Whisper transcription) and another 4 hours to retime the whole subtitle file, do some light editing and quality check it.

If anyone is curious about comparing the results, MTALL-144_comparison_subs.zip contains the full Whisper translation (MTALL-144_whisper.en.srt), the Whisper transcription I used as the base (MTALL-144_whisper.jp.srt) and an .ass that contains the full Whisper translation displayed at the top of the video and the final manual translation displayed at the bottom (MTALL-144_comparison.ass).

For those who don't care and just want the sub, skip to here and read below:

For the proper version, there's both an .srt and an .ass version in MTALL-144_manual_subs.zip. The only difference is that the .ass contains some commented lines that I either decided not to display or couldn't tell whether they were dialog or gibberish, and it also makes use of the actor function to display who is saying which lines (which doesn't matter at all for playback; it only helps keep track when editing).

Thanks @SamKook , very good test report. I plan to look into it during the weekend.

Quick question: is your source from streaming sites, or sukebei? I plan to use the same source.
