I haven't analyzed any of the subs I created with it in much detail; I've just been messing around with it from time to time, so I couldn't tell you how often it does it. I just tested whether it supported DTS audio from a Blu-ray, and the very first line had that issue.

If you read the limitations in the model-card.md file in the GitHub repository, it says this about that behavior:

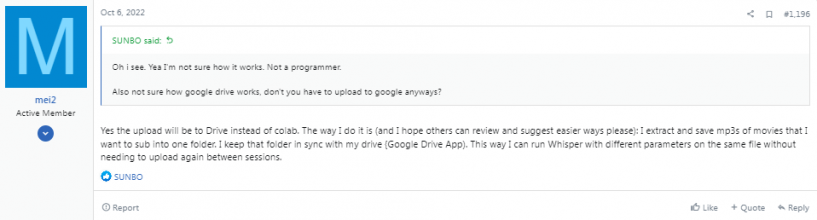

So as long as the dialog is generic enough, I imagine it shouldn't be too much of an issue; it shouldn't mess things up too much even if it invents stuff. Also, nobody here is doing professional work. It's just fans doing their best, so nobody expects perfection.

Also, whether the lines repeat or not has always seemed random to me. When I ran the same audio file 4 times in a row to first test it, the first result was the best, and the number of repeated lines varied wildly. Today it was the opposite, with 2 tries on that English audio.

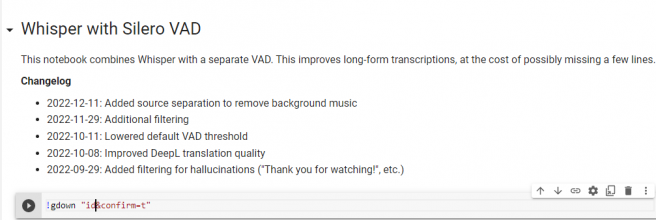
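If anyone wants to put a number on how much the repeated lines vary between runs, here is a rough sketch. It assumes the output was saved as an .srt file, and the function names (`cue_texts`, `count_repeats`) are just made up for this example; they are not part of the tool. It only counts a cue as a repeat when its text is identical to the cue right before it, so it won't catch invented lines.

```python
import re

def cue_texts(srt_text):
    """Return the text of each cue in an SRT-formatted string."""
    texts = []
    # Cues are separated by blank lines.
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            # Everything after the timestamp line is the cue text.
            if "-->" in line:
                body = " ".join(l.strip() for l in lines[i + 1:] if l.strip())
                if body:
                    texts.append(body)
                break
    return texts

def count_repeats(texts):
    """Count cues whose text matches the previous cue (case-insensitive)."""
    return sum(
        1 for prev, cur in zip(texts, texts[1:])
        if cur.casefold() == prev.casefold()
    )

# Tiny made-up sample, only for illustration.
SAMPLE = """1
00:00:01,000 --> 00:00:03,000
Thank you.

2
00:00:03,000 --> 00:00:05,000
Thank you.

3
00:00:05,000 --> 00:00:07,000
Thank you.

4
00:00:07,000 --> 00:00:09,000
Where are we going?
"""

if __name__ == "__main__":
    texts = cue_texts(SAMPLE)
    print(f"{count_repeats(texts)} repeated cues out of {len(texts)}")
```

Running it on the output of each try would give a quick way to compare runs instead of eyeballing the files.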

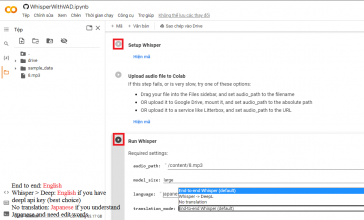

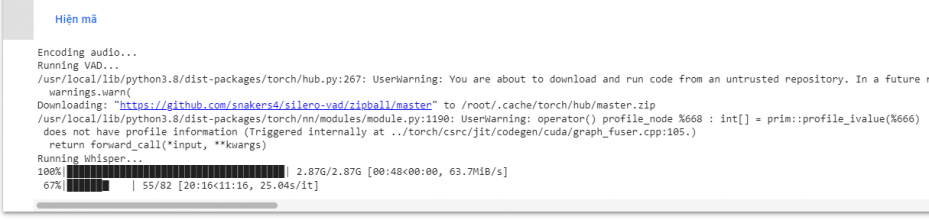

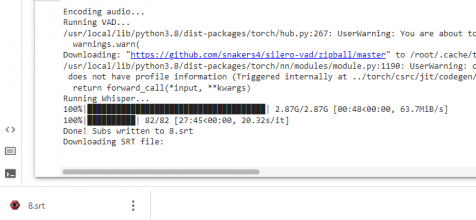

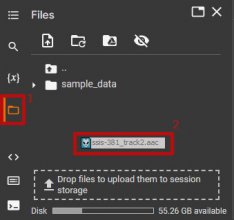

Code:

However, because the models are trained in a weakly supervised manner using large-scale noisy data, the predictions may include texts that are not actually spoken in the audio input (i.e. hallucination). We hypothesize that this happens because, given their general knowledge of language, the models combine trying to predict the next word in audio with trying to transcribe the audio itself.
