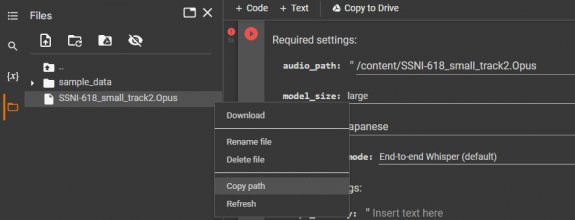

I got curious, so I looked up MIAE-208. It looks like a good movie; good choice. One thing that might help with your work is to use a speech-recognition model like Whisper to produce a baseline transcription with timestamps, then layer your own human translation on top of that baseline. I'm guessing that could speed up the work quite a bit.

As I was writing this, it occurred to me that I could just run the baseline right away and PM you that version. Should be fun.
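A minimal sketch of the "baseline with timestamps" idea, assuming the `openai-whisper` Python package. Its `transcribe()` result contains a `"segments"` list whose entries carry `start`, `end` (in seconds) and `text`, and the code below turns those segments into an `.srt` subtitle file you could then correct and retranslate by hand. The file names in the comments are hypothetical, and the smaller models (`tiny`, `base`, `small`) are the ones more likely to be usable on a weak, CPU-only machine, just slowly.

```python
from typing import Iterable, Mapping


def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: Iterable[Mapping]) -> str:
    """Turn segments with 'start', 'end' and 'text' keys into an SRT document."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = srt_timestamp(seg["start"])
        end = srt_timestamp(seg["end"])
        text = seg["text"].strip()
        # Each SRT cue: counter line, time range line, then the subtitle text.
        blocks.append(f"{index}\n{start} --> {end}\n{text}\n")
    # A blank line separates consecutive cues.
    return "\n".join(blocks)


# Assumed usage with openai-whisper (not executed here; file names are hypothetical):
#   import whisper
#   model = whisper.load_model("small")
#   result = model.transcribe("MIAE-208.mp4", language="ja", task="translate")
#   with open("MIAE-208.en.srt", "w", encoding="utf-8") as f:
#       f.write(segments_to_srt(result["segments"]))
```

With `task="translate"` Whisper outputs rough English directly; leaving it out keeps the Japanese transcript, which may be the better baseline if you prefer to do all the translating yourself and only reuse the timestamps.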

I don't know how to use Whisper. It sounds really complicated and really GPU-heavy (the notebook I use for translating isn't very powerful at all), but if I managed to get it working, yes, it could theoretically speed up my work by a significant margin.

The translation itself is very easy for me: they speak clearly and close to the microphone, so I can tell with 100% accuracy what they are saying. I've translated about 50 minutes of the movie and only had trouble with one specific Sora Shiina line that I couldn't hear because the girls were laughing. I tried machine translation on that line, but it didn't help much either, so I had to improvise something that made sense.

My main problem with MIAE-208 is that it's a 3h20m, dialogue-heavy movie (the girls talk every 3 seconds, even during hardcore sex); every NTR movie is like that because of the genre's gimmick. So it takes a looong time to translate, because I have to make the timestamps too.