Post your JAV subtitle files here - JAV Subtitle Repository (JSP)★NOT A SUB REQUEST THREAD★

- Thread starter Eastboyza



It makes sense in a sentence, but does it make sense in a scenario? In my experience, the sentence makes sense within the scenario. I am not saying that Whisper is perfect and will transcribe every word correctly and accurately. The important thing is that the context of the scenario or story is there.

My question is, based on your experience, how often does Whisper invent stuff?

Thank you for your input about Whisper inventing stuff. I will post here if I ever encounter that.


Hi everyone.

Finally, after reading this thread for quite a long time, I'm in a position to start giving back.

I always wanted to translate JAV, but because I have no knowledge of Japanese at all, it was nearly impossible for me to get results with a minimum of quality (imo) beyond a Google translation, which in my experience often came out as near comedy.

Over the last few days, while catching up with the thread, I discovered Whisper (thanks to everyone involved in bringing it to this forum), and last night I tried my very first translation with a movie I was always curious about.

I have noticed that some lines are missing and that the translation is inaccurate at some points, but it is still a great step forward toward getting something of quality.

My translation is a work in progress, and I invite everyone who can improve it to do so. My intention is to provide "a base file", so people who can actually produce quality subs can work from it and fill in the blanks faster than doing the whole process from the beginning.

So from now on, anyone who knows more about this, feel free to take "my" files and make them better so the community can enjoy them.

Have a great day guys. Thanks to Whisper, a shiny new era is starting.


Attachments

I haven't analyzed any of the subs I created with it in much detail; I've just been messing around with it from time to time, so I couldn't tell you how often it does it. I just tested whether it supported DTS audio from a Blu-ray, and the very first line had that issue.

If you read the limitations from the model-card.md file in the github repository, it says this about that behavior:


Code:

However, because the models are trained in a weakly supervised manner using large-scale noisy data, the predictions may include texts that are not actually spoken in the audio input (i.e. hallucination). We hypothesize that this happens because, given their general knowledge of language, the models combine trying to predict the next word in audio with trying to transcribe the audio itself.

So as long as the dialog is generic enough, I imagine it shouldn't be too much of an issue; it shouldn't mess things up too much even if it invents stuff. Also, nobody here is doing professional work, it's just fans doing their best, so nobody expects perfection.

Also, whether lines repeat or not has always seemed random to me. When I ran the same audio file 4 times in a row to first test it, the first result was the best and the number of repeated lines varied wildly. Today, it was the opposite with 2 tries on that English audio.
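For the repeated-line problem described above, one option is to clean the output after the fact. The following is a minimal Python sketch and not part of Whisper itself; the function name `collapse_repeats` and the `(start, end, text)` tuple layout are assumptions made for illustration. It merges runs of consecutive cues with identical text into a single cue spanning the whole run.

```python
from typing import List, Tuple

# Assumed cue layout for this sketch: (start seconds, end seconds, text)
Cue = Tuple[float, float, str]


def collapse_repeats(cues: List[Cue]) -> List[Cue]:
    """Merge consecutive cues whose text is identical (ignoring outer spaces)."""
    merged: List[Cue] = []
    for start, end, text in cues:
        if merged and merged[-1][2].strip() == text.strip():
            # Same text as the previous cue: extend the previous cue's end time.
            prev_start, _, prev_text = merged[-1]
            merged[-1] = (prev_start, end, prev_text)
        else:
            merged.append((start, end, text))
    return merged


if __name__ == "__main__":
    sample = [
        (0.0, 2.0, "Thank you."),
        (2.0, 4.0, "Thank you."),
        (4.0, 6.0, "Thank you."),
        (6.0, 8.0, "See you tomorrow."),
    ]
    for cue in collapse_repeats(sample):
        print(cue)
```

Note that this also merges dialogue that really is repeated back to back, so it is worth spot-checking the merged cues against the audio.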

Haha - you picked a very, very difficult movie to translate. I watched it last night. There is way too much cross-talk and too many parallel dialogues. It's a fun movie -- nasty friends Sora-san has.

I translated 1/3 of MIAE-208.

Tiresome, but feeling pretty good about the results.

I'll finish in about a week.

As with most transcription/translation software, using it as a base is the best option. As we have all said, anything that supplies timecodes and even some subs is a good start.

You're not wrong. I just tried it and it seems to be a problem here too.

How are you physically uploading the file? I have done it by moving the file into the frame on the left, and I have also done it by hitting the upload button then navigating its file path. Both with the same result: the file appears at the bottom of the window, not in the file list like it is in yours. And you can't copy the path. It says they are in "session storage."

I could run Python for it, but I'd rather stick to my old method.

Yeah, I'll take easier ones after this one, hahahaha.

I've been using Audacity to extract the audio from the MP4s into WAV files and running those through Whisper.

Here is the CLI command I'm using:

Code:

whisper "MovieName.wav" --language Japanese --task translate --device cuda --model medium --no_speech_threshold=0.5 --logprob_threshold=-0.5
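The same command can be assembled from a script, which helps when there are several files to process. This is a minimal Python sketch, assuming the `whisper` command is on PATH as in the post; the helper names `build_whisper_command` and `run_whisper` are hypothetical, and the flags are exactly the ones from the command above.

```python
import subprocess
from typing import List


def build_whisper_command(
    audio_path: str,
    model: str = "medium",
    no_speech_threshold: float = 0.5,
    logprob_threshold: float = -0.5,
) -> List[str]:
    """Return the argument list for the CLI call quoted in the post."""
    return [
        "whisper",
        audio_path,
        "--language", "Japanese",
        "--task", "translate",
        "--device", "cuda",
        "--model", model,
        # The flag=value form keeps the negative number attached to its flag.
        f"--no_speech_threshold={no_speech_threshold}",
        f"--logprob_threshold={logprob_threshold}",
    ]


def run_whisper(audio_path: str) -> int:
    """Run Whisper on one file and return its exit code (needs whisper installed)."""
    return subprocess.run(build_whisper_command(audio_path)).returncode


if __name__ == "__main__":
    # Only prints the command; call run_whisper() to actually execute it.
    print(" ".join(build_whisper_command("MovieName.wav")))
```

Passing the arguments as a list avoids shell quoting problems with filenames that contain spaces.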


BTW, does anyone here use Arctime Pro for subtitle work? It has an intuitive interface with a bunch of nifty features. One of the Akiba members (@MrKid) recommended it to me some time ago, and since then I have been a fan of Arctime. The website is in Chinese but the app itself has an English menu: https://arctime.cn/download-arctime.html.

Do you not like Whisper? Which one is better for you?

Try the Whisper+VAD version; it removes the repeated lines.

Below is a sample transcription from Whisper. I cleaned it up a little, fixing pronouns like I/you, he/she, etc. I also transcribed the same movie in CapCut, and the result is similar in meaning. If someone wants to review whether the transcriptions are correct, just message me and I can give you the file.

I believe the Whisper transcriptions are correct because they convey a similar meaning to what CapCut produced for the same movie. In addition, the transcription matches what is happening in the scene.

If Whisper's results did not satisfy you, maybe they will satisfy someone else. Just don't expect the result to be close to a human transcription.

And to those who are getting repeated lines, it happened to me too. My solution was to run the same file again; there may have been some errors since I am running Whisper in the web version. After running it again, the lines no longer repeated, and you can compare your first result to the second one. Also, I convert the video file to MP3 at the highest volume the converter allows. I don't know if this will work for you, but it worked for me.
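Comparing the first run against the second can be done with Python's standard `difflib` module. This is a minimal sketch, assuming each run's subtitle text has already been read into a list of lines; the function name `diff_runs` and the labels `run1`/`run2` are placeholders chosen for illustration.

```python
import difflib
from typing import List


def diff_runs(first: List[str], second: List[str]) -> List[str]:
    """Return unified-diff lines between the text lines of two runs."""
    return list(
        difflib.unified_diff(
            first, second, fromfile="run1", tofile="run2", lineterm=""
        )
    )


if __name__ == "__main__":
    run1 = ["Good morning.", "Good morning.", "Let's go."]
    run2 = ["Good morning.", "Let's go."]
    print("\n".join(diff_runs(run1, run2)))
```

Lines starting with `-` appear only in the first run and lines starting with `+` only in the second, so a repeated line that vanished on the rerun shows up as a `-` entry.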

The sample below is a 41-minute video that generated 600+ lines.

View attachment 3127707

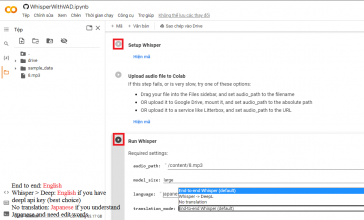

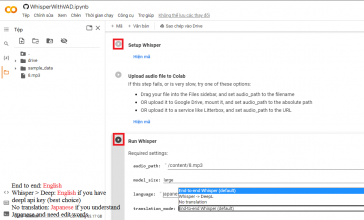

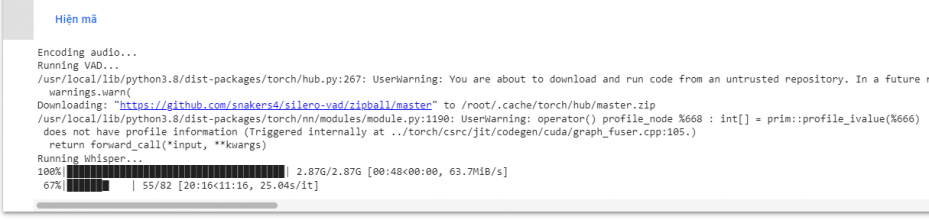

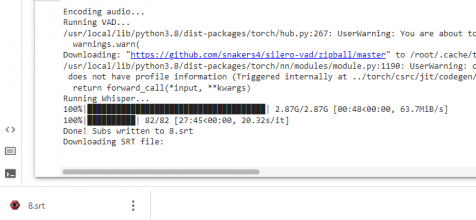

Recently, people have become interested in using Whisper for subtitles, and some ran into errors when installing it.

I will guide you through running Whisper with VAD on Colab (no need to install Python, git, PyTorch, etc.; if you want to install Whisper locally with Python, see Post #4,513 by @SamKook).

colab.research.google.com

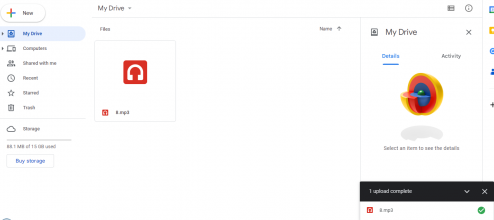

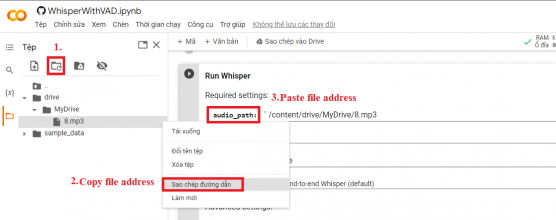

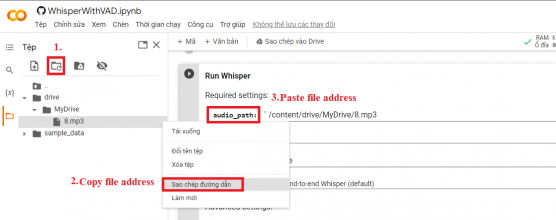

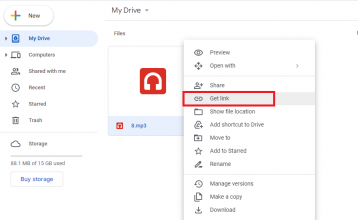

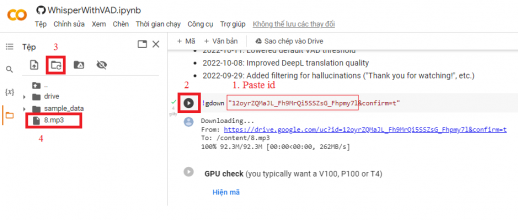

-Upload the mp3 file to colab. (many of you fail at this step)

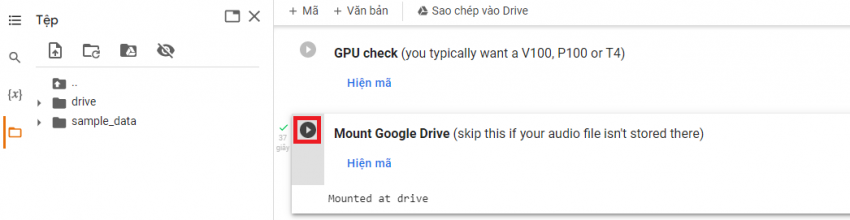

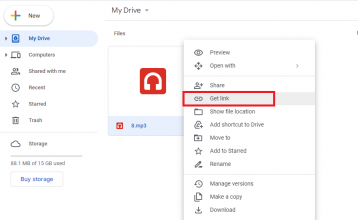

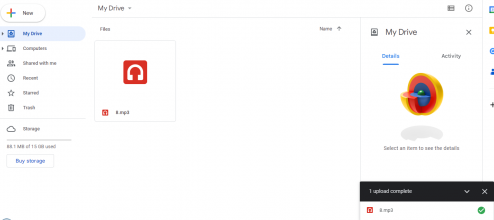

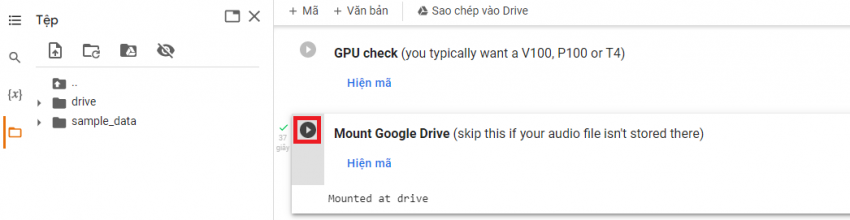

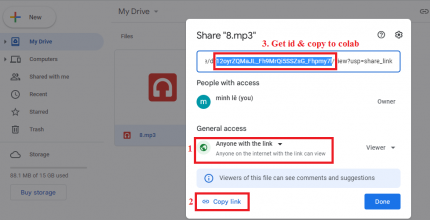

Method 1: Mount Google Drive

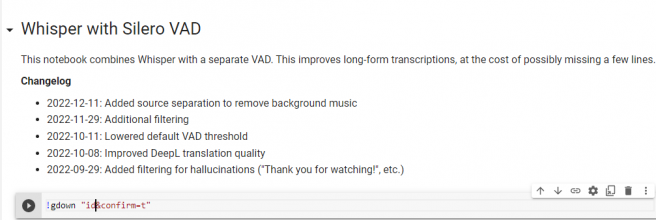

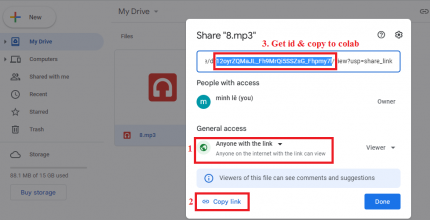

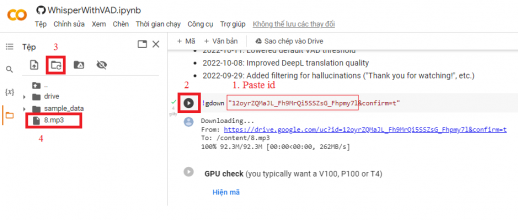

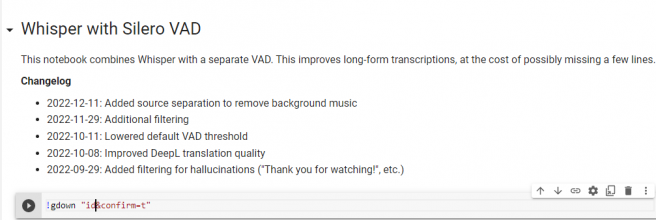

Method 2: Add the command !gdown "id&confirm=t"

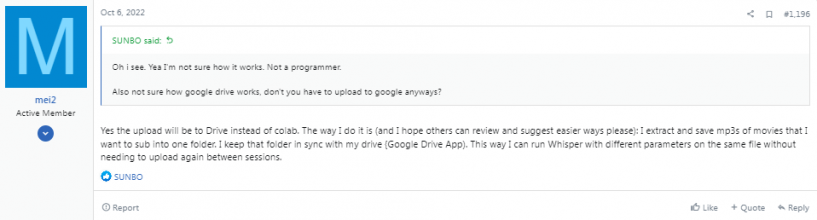

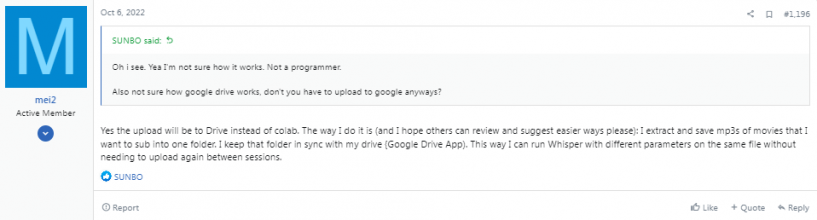

Method 3: Install the Google Drive app (refer to @mei2)

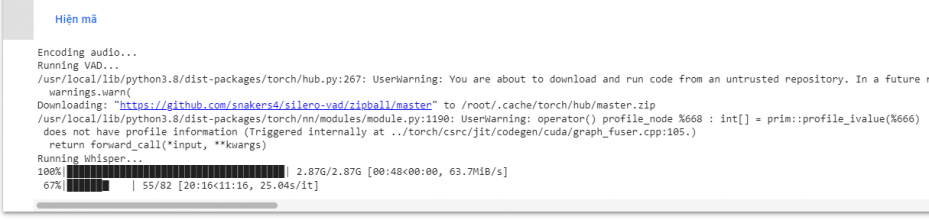

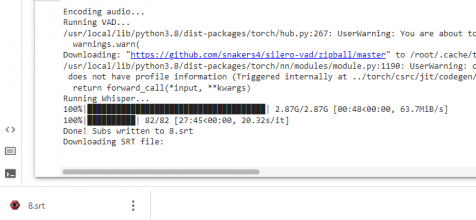
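Method 2 needs the file's Google Drive ID. The following is a minimal Python sketch for pulling that ID out of a share link, assuming the link has one of the two common shapes, `.../file/d/<id>/...` or `...?id=<id>`. The helper names `drive_file_id` and `gdown_cell` are hypothetical, and the `id&confirm=t` argument is copied from Method 2 as written above rather than taken from the gdown documentation.

```python
import re
from typing import Optional

# Assumed link shapes: .../file/d/<id>/... or ...?id=<id>
_ID_PATTERNS = (
    re.compile(r"/file/d/([A-Za-z0-9_-]+)"),
    re.compile(r"[?&]id=([A-Za-z0-9_-]+)"),
)


def drive_file_id(share_link: str) -> Optional[str]:
    """Return the file ID found in a Google Drive share link, or None."""
    for pattern in _ID_PATTERNS:
        match = pattern.search(share_link)
        if match:
            return match.group(1)
    return None


def gdown_cell(share_link: str) -> Optional[str]:
    """Build the Colab cell line from Method 2 for the given share link."""
    file_id = drive_file_id(share_link)
    if file_id is None:
        return None
    return f'!gdown "{file_id}&confirm=t"'


if __name__ == "__main__":
    # FILE_ID_HERE is a placeholder, not a real file.
    print(gdown_cell("https://drive.google.com/file/d/FILE_ID_HERE/view?usp=sharing"))
```

The printed line is meant to be pasted into a Colab cell; the leading `!` is Colab's way of running a shell command.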

And this is the result:


Attachments


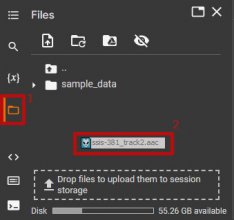

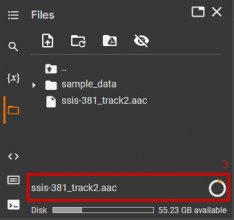

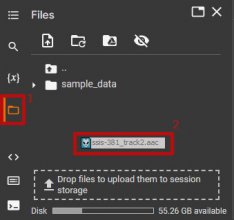

Since MrKid didn't mention this method, here's exactly how I did the drag and drop method, for those having difficulties, if you want to make sure the issue is something else.

0. DO NOT run the Mount Google Drive step.

1. Click the folder icon to open the Files column on the left of the webpage.

2. Drag your audio file on an empty spot in that column(make sure there's enough free space written at the bottom to store your file).

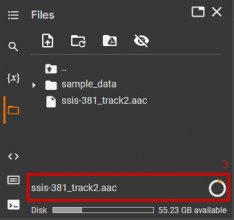

3. Wait for the file to finish uploading. The orange in the circle is the progress and once it's completed, the filename at the bottom will disappear.

Then you put the audio filename in the input(it's what the page says to do) or copy it like I did in my previous post which is how I did it.
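The free-space check in step 2 and the "did it finish uploading" check in step 3 can also be done from a notebook cell. This is a minimal Python sketch using only the standard library; the helper names `fits_on_disk` and `uploaded_ok` are hypothetical, and using the current directory as the upload target is an assumption about where the dropped file lands.

```python
import os
import shutil


def fits_on_disk(file_size_bytes: int, target_dir: str = ".") -> bool:
    """True if target_dir has at least file_size_bytes of free space."""
    return file_size_bytes <= shutil.disk_usage(target_dir).free


def uploaded_ok(path: str, expected_size_bytes: int) -> bool:
    """True if the file exists and has the size of the local original."""
    return os.path.isfile(path) and os.path.getsize(path) == expected_size_bytes


if __name__ == "__main__":
    # Example: a 150 MB audio file; both the size and the name are placeholders.
    size = 150 * 1024 * 1024
    print("Enough space:", fits_on_disk(size))
    print("Upload complete:", uploaded_ok("MovieName.mp3", size))
```

A size mismatch usually means the upload is still in progress or was interrupted, which would explain a file that shows up but cannot be used yet.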


I use Arctime for editing subs that are produced by Whisper. Like SubtitleEdit, Arctime has its own video-to-text sub generator module, but I have not used it.

Yes. I see.

I really like Aegisub, thanks to Imscully's recommendation. The builds made by some in the anime community have been very useful.

Thank you for trying to help.

I think "many" are failing with either method, and not because of 100% user error.

Personally, I think it has to do with Whisper itself. It simply doesn't work for everyone.

Most software is like that.

I suspect Whisper will just be another piece of software that isn't user friendly to the masses and fades into obscurity.

Wasn't there another piece of software that was talked about a lot here 5-6 months ago?

Many wanted to try it, but it wasn't user friendly. What happened to that one? No, it's not CapCut.

But anyway, good luck to those using it. I don't see Whisper increasing the number of subs here.

We still have many subbers here like Chuckie, Imscully, myself and the rest.

Praise the subbers and appreciate them please.

P.S. Maybe more funding will make Whisper better, hopefully.


I don't want to be mean since not everyone is good with computers, but Whisper is actually very easy to install.

What's most likely happening to most people who failed is that they made a small mistake somewhere: they're not familiar with how the command line works, or they needed administrator rights, or they didn't select the option to add Python or git to the PATH environment variable during installation, or something else like that, and it ends up not working.

Since nobody gave me enough information here to actually diagnose the installation problem they were having, or created a thread in the tech support section of the forum, who knows where you're all failing.

Here's a quick guide with the required steps, which will hopefully help some (I just tested it in a fresh Win11 VM to make sure it really is that simple, but I can't test actual usage from that fresh install since the VM doesn't see my GPU):

1. Whisper works with Python 3.7 to 3.10 (not 3.11 yet, which is currently the latest), so go to python.org and download Python 3.10.9 (or whatever 3.10 version is current when you read this); just scroll down a bit on the downloads page to find a download link for it. Most will want the "Windows installer (64 bit)" version.

When you install it, you can use the defaults for everything, but make sure to check "add python.exe to PATH" so you can launch Python from any folder. Disabling the path length limit at the end of the installer isn't a bad idea either.

2. Install git from git-scm.com and use the defaults, except where it asks about adding it to PATH, which you want.

3. Open a command line window (open the Start menu and type cmd), type the following in it and press Enter:

Code:

pip install git+https://github.com/openai/whisper.git

Type whisper in that same command line to make sure it really worked (it should print a bunch of stuff like the usage help and an error that says audio is missing) and that's it, Whisper is installed.
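The checks in the steps above (a supported Python version, and the tools being reachable from PATH) can be scripted. This is a minimal Python sketch; the 3.7 to 3.10 range is the one stated in the post and may not hold for later Whisper releases, and the helper names `python_supported` and `on_path` are hypothetical.

```python
import shutil
import sys
from typing import Tuple


def python_supported(version: Tuple[int, int]) -> bool:
    """True if (major, minor) falls in the 3.7-3.10 range given in the post."""
    return (3, 7) <= version <= (3, 10)


def on_path(command: str) -> bool:
    """True if the command can be found through the PATH environment variable."""
    return shutil.which(command) is not None


if __name__ == "__main__":
    current = (sys.version_info.major, sys.version_info.minor)
    print("Python", current, "supported:", python_supported(current))
    for tool in ("git", "pip", "whisper"):
        print(tool, "on PATH:", on_path(tool))
```

If `git` or `whisper` reports as not on PATH right after installing, opening a new command line window is worth trying first, since an already open window keeps the old PATH.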

By default, it'll likely only work with your CPU. You can tell it is using the CPU because it prints a warning that FP16 is not supported on CPU when it starts. If you have an Nvidia GPU, there are 2 extra steps (or just do extra step 2 before steps 2 and 3 of the installation instructions above, assuming you have never tried to install Whisper before):

1. In the command line, type the following to uninstall 3 modules that Whisper installed (I don't know why pip has a 3 here; it might not be required, but I'm far from a Python pro, so I'm following the PyTorch instructions and not questioning it):

Code:

pip3 uninstall torch torchvision torchaudio

2. Go to pytorch.org and, on the main page, in the "INSTALL PYTORCH" section, choose Stable, Windows, Pip, Python and whichever version of CUDA your GPU supports (the lowest one if in doubt), then copy the pip3 command it generates below and run that command in the command line.

Mine looked like this:

Code:

pip3 install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu116

Then Whisper should use your GPU properly instead of defaulting to the CPU. Be warned that there's a 2.4GB download or so for the cuda thing.
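To confirm that the reinstalled PyTorch actually sees the GPU before starting a long run, `torch.cuda.is_available()` can be called from Python. This is a minimal sketch; the wrapper names `cuda_available` and `pick_device` are hypothetical, and the function returns `None` when PyTorch is not installed at all.

```python
from typing import Optional


def cuda_available() -> Optional[bool]:
    """Return torch.cuda.is_available(), or None if PyTorch is not installed."""
    try:
        import torch
    except ImportError:
        return None
    return bool(torch.cuda.is_available())


def pick_device() -> str:
    """Choose the value to pass to whisper's --device flag."""
    return "cuda" if cuda_available() else "cpu"


if __name__ == "__main__":
    print("CUDA available:", cuda_available())
    print("Device to use:", pick_device())
```

A result of `False` with an Nvidia card installed suggests the CPU-only build of PyTorch is still in place, or that the GPU driver is out of date.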

I installed Python (step 1) and git (step 2), but my computer uses an Nvidia GeForce GTX 1650 and I can't find a CUDA version in my Program Files. I have never installed the CUDA Toolkit on this computer, so I can't install PyTorch; it shows an error in the command box. Does anybody know how to install PyTorch for a GTX 1650?